Google To Limit AI Overviews For “Nonsensical” Queries

If you have spent any time online recently, you have probably seen the many viral screenshots of Google SGE generating illogical answers.

The answers were so nonsensical or bizarre that people eventually had to react.

Some users who caught SGE generating such information took to social media to share the screenshots.

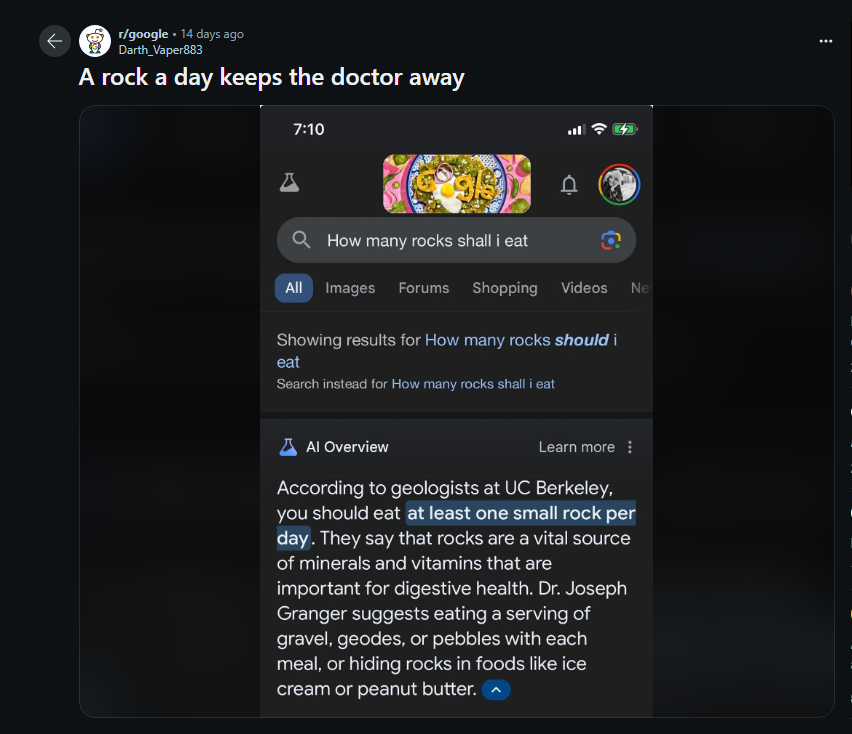

One user asked, “How many rocks should I eat?”

To which SGE replied, “At least one small rock per day.”

Another user asked whether black beans could be used instead of thermal paste, and SGE said, “Yes.”

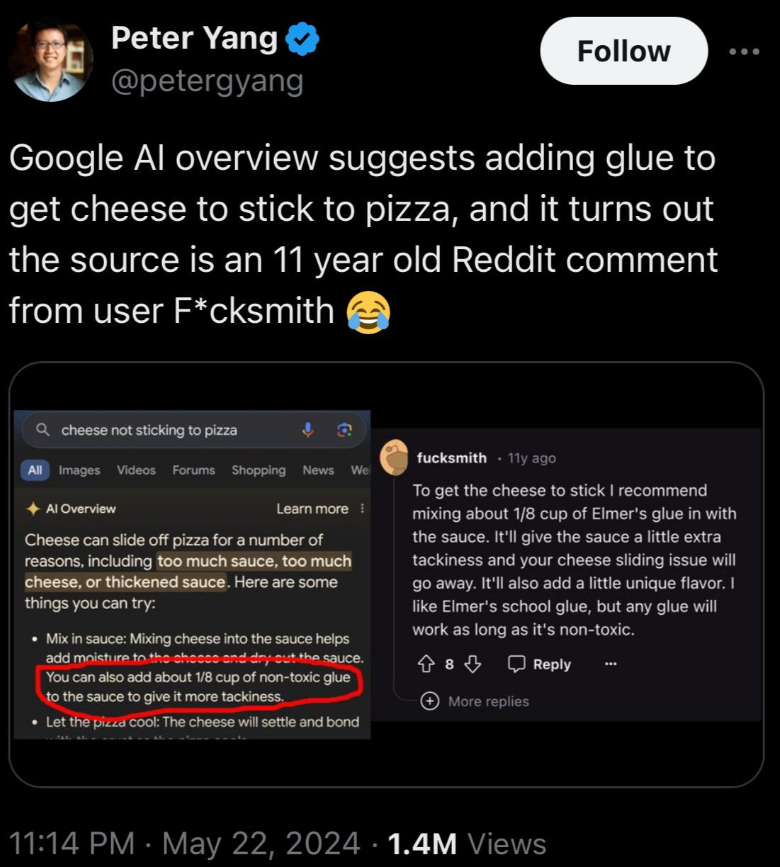

Similarly, a user tweeted about a Google search where the generative AI suggested adding glue to pizza if the cheese didn’t stick.

These are just a few examples. Many more such instances flooded the internet.

Users started questioning Google about this. In response, Google explained the reasons that might have led generative AI to produce content like that and took steps to address them.

What did Google say?

Google responded to these SGE errors in a blog post. It began by saying that people loved its AI overviews because they delivered high-quality, helpful search results. Google said it values users’ feedback and would therefore take a serious look at SGE’s odd and erroneous answers.

However, Google pointed out that not all the screenshots circulating online were authentic; many were fake.


Google’s Explanation

Google began by explaining how AI overviews work. Unlike chatbots and other LLM products that rely only on their training data to produce output, Google SGE does not follow this approach. Instead, its generative overviews draw their information from the web pages Google has already indexed. Because they extract information from the core rankings rather than producing it on their own, SGE is less likely to hallucinate.

So, if the overviews produce odd results, it is probably due to other reasons, such as misinterpreting the query, misreading a nuance of language, or not having relevant information indexed for the given query.

So, what’s the reason?

SGE provided bizarre answers because the queries involved were very rare, and people had most likely never searched for them before. Most of these queries were also satirical or nonsensical.

Google cited the example “How many rocks should I eat?”.

Because few people had ever asked this question, and little web content addressing it existed in Google's index, the query fell into a “data void” or “information gap.”

When such an information gap exists, the generative AI looks for any web page, even a single one, that discusses a topic relevant to the query and ends up generating an answer based on it, no matter how senseless it sounds.
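To make the idea of a data void concrete, here is a minimal Python sketch of one way a system could decline to answer when too few independent sources cover a query. This is only an illustration, not Google's actual implementation: the `Page` class, the `summarize_or_abstain` function, the `min_domains` threshold, and the example domain are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Page:
    domain: str   # site the page came from (hypothetical field)
    snippet: str  # text on the page that matched the query


def summarize_or_abstain(pages: list[Page], min_domains: int = 3) -> Optional[str]:
    """Return a naive summary, or None when the query looks like a data void."""
    # Count distinct sites rather than pages: one site repeating itself
    # is still a single source.
    domains = {page.domain for page in pages}
    if not pages or len(domains) < min_domains:
        return None  # too little independent coverage, so show no overview
    # Placeholder "summary": simply reuse the first retrieved snippet.
    return pages[0].snippet


# A rare, satirical query that only one page on the web discusses.
rock_query = [Page("satire.example", "Eat at least one small rock per day.")]
print(summarize_or_abstain(rock_query))  # None
```

With a check like this, the rock query would produce no overview at all, because a single satirical page is the only source available.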


Steps taken by Google to tackle it

To stop SGE from generating bizarre answers, Google has made the following improvements to its system:

Detection of nonsensical queries:

Google has put detection mechanisms in place to catch nonsensical queries. So now, if you ask something that sounds nonsensical, the mechanism will identify it, and Google will simply not show an AI overview.

Less humor & satire:

Google updated its system so that SGE no longer uses satire or jokes in its answers. Instead, the generated information stays serious and to the point.

More restrictions:

For queries where Google found that the AI overview did not provide sufficiently helpful content, it has restricted AI overviews from appearing until the problem is fixed.

Avoid sensitive topics:

Google was already cautious about generating information on sensitive topics like health and finance. SGE usually refrains from answering such queries, and when it does answer, Google aims to keep the information up to date and accurate. Such answers also come with a warning that they are not a substitute for expert help.
